202 – Streaming Log Data to MySQL on AWS

This course assumes you are familiar with the basics of Apache Nifi – Read more here

Follow along using our Auto-Launching Nifi on AWS – Learn how here

Streaming data into a MySQL database for later analysis and review is a common use case. Nifi is able to offer an end to end one stop solution from picking up the data to inserting it into your database. In this example we will start by tailing a log file, parsing the data, and landing it in an Amazon RDS (mySQL) database.

Start With the Template

There is a Nifi template that accompanies this demo. You can download it here and upload it into your own instance. Download the Template here [Click]

Configuring your database

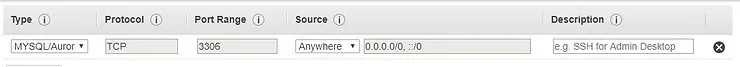

The AWS RDS configuration is mostly just out of the box. Even if you are not using RDS be sure that the database is accessible on port 3306 from the Nifi node. Typically the default security group allows this but your organization may have implemented different rules. Although it isn’t necessarily the tightest security practice, MySQL’s 3306 port can be open to the world as it is password protected. A production based database should have a tighter security group, potentially only white-listing the Nifi node itself.

Key attributes to note down for the database

-

The Endpoint

-

The Port (Default: 3306)

-

Target Schema

-

Target Table

-

Fields in Target Table

Mysql Security Group – optionally restrict the source

Calculated Systems is an AWS partner and can help you setup your environment end to end. If you have questions or would like help use the chat box in the lower right or email us at info@calculatedsystems.com

For our demo we are using

-

Endpoint: XXXXX.us-east-1.rds.amazonaws.com

-

Port: 3306

-

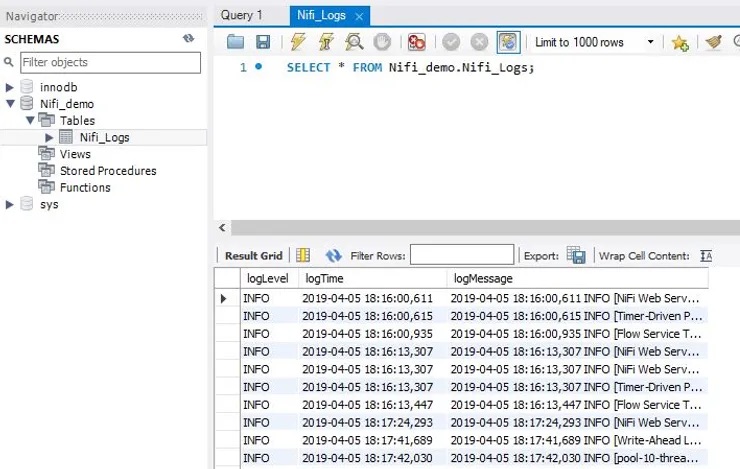

Target Schema: Nifi_demo

-

Target Table: Nifi_Logs

-

Fields: logLevel, logTime, logMessage

Configuring your MySQL driver

Depending on the version of MySQL you are integrating with you may need to download a specific driver. For RDS you may need to ssh into your Nifi node and run this one command. The rest of this example assumes this is the method used to acquire the connector. If you used an alternate method be sure to use a different class path.

-

sudo yum install -y mysql-connector*

Follow along with our Quick Start Nifi on AWS

-and-

Download the template and try it yourself

Setting up a pooled controller service

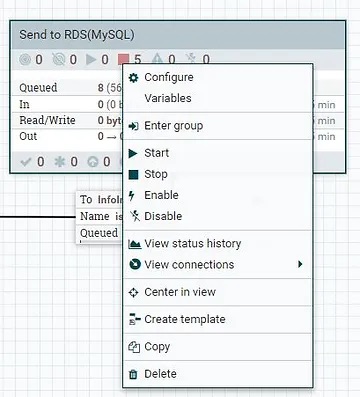

Nifi handles connection pools to databases to help ensure they do not get overloaded by multiple processors all at once. These connection pools are represented as a Controller Service. These controller services can live in a process group. In the template for this exercise(LINK TO TEMPLATE) the Controller Service ‘DBCPConnectionPool’ is part of the ‘Send RDS(Mysql)’ process group. You can view it by right clicking on the process group and going to configure

In the controller services tab you can configure the process group(gear icon) and go to its properties. Several attributes need to be configured in order to stream data to RDS. For our example we use

-

Database Connection URL: jdbc:mysql://xxxxx.us-east-1.rds.amazonaws.com:3306/Nifi_demo

-

Database Driver Class Name: com.mysql.jdbc.Driver

-

Database Driver Location: /usr/share/java/mysql-connector-java.jar

-

Database User: admin

-

Password: XXXXX

After configuring those variable hit ‘Apply’ and then the lightning bolt next to the gear to start the controller service

Using the Template

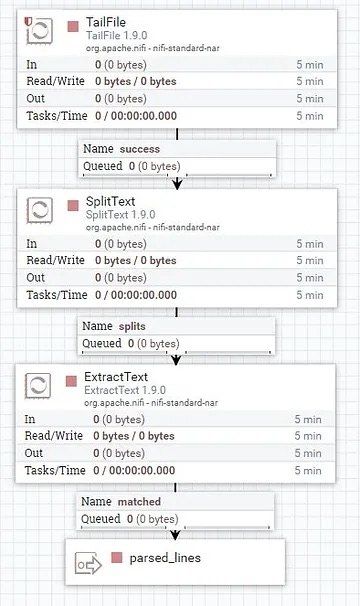

The template consists of two control groups and a routing processor This is a functional break down of the steps needed to stream data into MySQL

Retrieve & Parse

For a data source we are simply using the nifi-app.log as it is included in every installation of nifi. This log file has new lines appended to it regularly and provides an easy example of a typical tailing use-case. Every time Nifi detects a change it produces a flow file. This is then split into individual lines and parsed to extract the logLevel, logTime, and logMessage.

RouteonAttribute

This determines if a file is an ‘INFO’ event or not. All log levels except info are dropped at this stage

Send to RDS(MySQL)

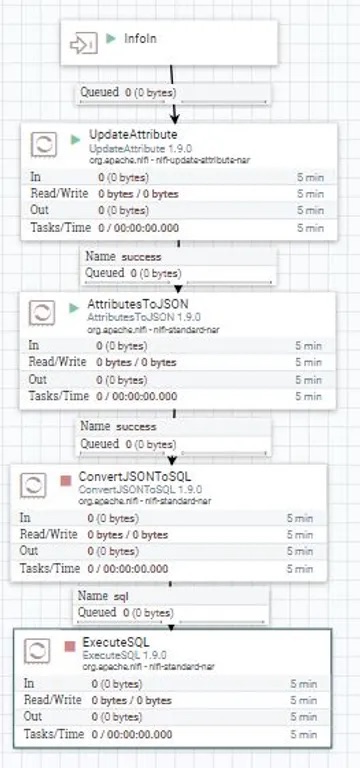

This processor group takes the previously extracted and parsed messages and lands it in RDS. The first processor, UpdateAttribute renames the relevant attributes to be on par with the target MySQL table. Next the AttributesToJSON processor formats it into an easy to read JSON message before it is converted to a SQL insert and finally landed in the database. This configuration is designed to add lines one at a time but depending on the requirements we could also batch the insert

Conclusion

Using Nifi is a quick, easy, and effective way to stream data into MySQL. The combination of drag and drop, real time processing, and flexibility make it ideal for data movement and event based processing.

Next Steps:

Learn more about moving data to the cloud